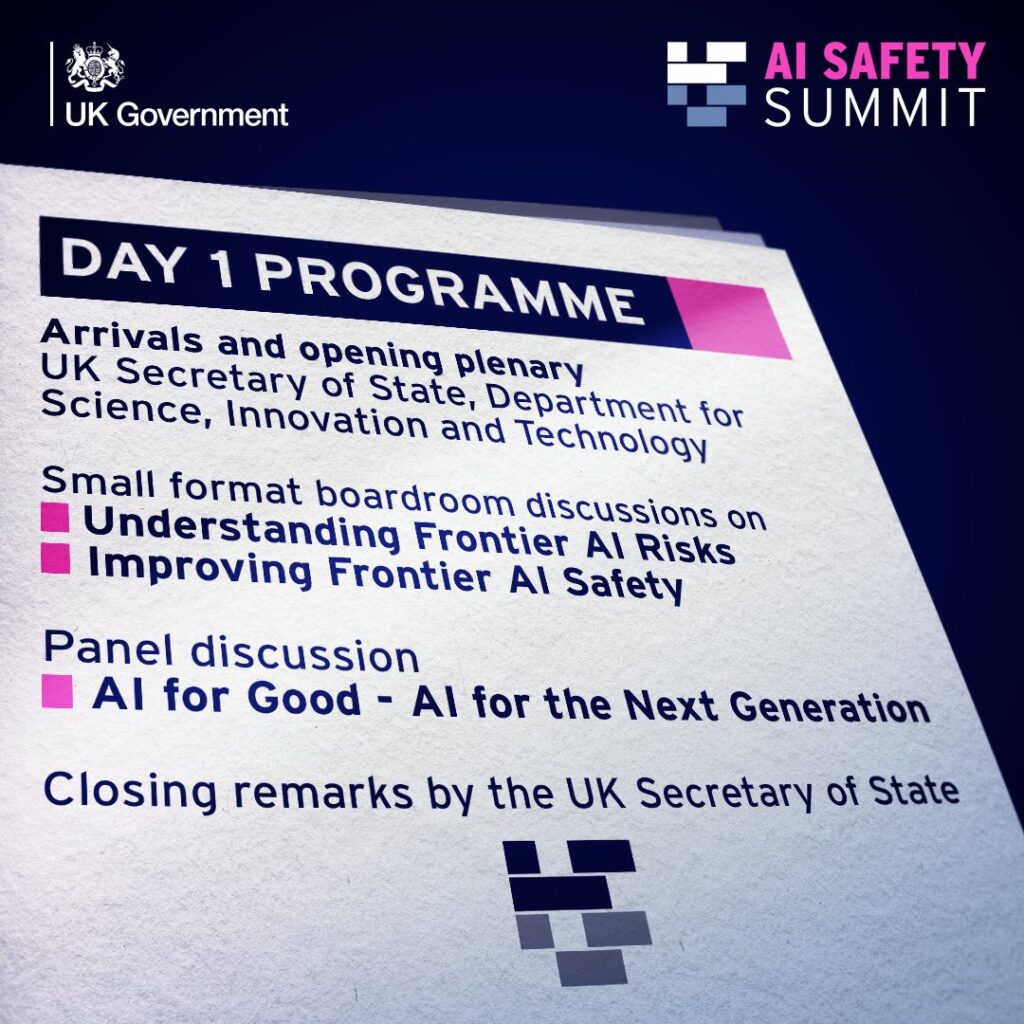

Artificial Intelligence (AI) offers remarkable prospects for transforming our economies and societies, but it also poses significant risks if not developed safely. The United Kingdom is hosting the world’s first AI Safety Summit at Bletchley Park on November 1-2, bringing together global leaders, businesses, academia, and civil society to chart a course for collective international action on the opportunities and risks presented by cutting-edge AI technology.

The Summit comes on the heels of extensive public consultation on the proposals in the AI Regulation White Paper. This White Paper outlines the initial steps toward establishing a regulatory framework for AI that maximizes its benefits while ensuring safety and security. The UK will provide an update on its regulatory approach later this year in response to the AI Regulation White Paper consultation.

In preparation for the Summit, the UK set five key objectives to encourage and facilitate inclusive discussions among diverse stakeholders, including government, businesses, civil society, academia, and more. Given the vast opportunities and substantial risks associated with AI, it’s imperative to engage voices from various sectors.

The UK government has engaged extensively with AI companies, countries, and international organizations and forums such as the OECD, the Council of Europe, and the G7 Hiroshima Process to gain a comprehensive understanding of AI safety and associated concerns. The UK also recognizes the valuable insights that can be drawn from academia, civil society, small and medium-sized enterprises (SMEs), and the voluntary and community sectors.

To ensure a wide range of voices are heard, the UK partnered with organizations like the Royal Society, the British Academy, techUK, The Alan Turing Institute, the Founders Forum, and the British Standards Institution. They conducted an inclusive public dialogue to inform global actions on AI safety. Various events were held, including roundtables with CEOs, senior leaders, venture capital firms, and think tanks, providing a platform for stakeholders to share their views on critical themes.

Throughout the journey to the Summit, the UK engaged with numerous individuals, businesses, and societal organizations. This process aimed to enhance the understanding of AI’s opportunities and risks and shape the ambitions of the AI Safety Summit. A summary of the key discussion areas is provided below.

Unlocking the Opportunities of AI:

Many participants highlighted the enormous potential of AI to boost economies, serve the public good, and enhance people’s lives. They saw AI as a tool to reduce administrative burdens in the public sector, allowing for more effective government-citizen engagement. However, the need for public awareness, continuous evaluation, and monitoring of AI applications in the public sector was emphasized. Collaborating with a broad range of stakeholders was seen as crucial to ensure AI’s use for social good aligns with public opinion.

Understanding Potential Societal Risks:

Participants acknowledged the risks of frontier AI that the Summit addresses, and the need to better understand and manage them while promoting public trust in the technology. They also raised concerns about existing risks, such as deepfakes, disinformation, online harms, financial crime, and potential job impacts. They noted AI’s role in exacerbating bias and discrimination and the need to tackle these issues. Given the rapid pace of AI development, they stressed the urgency of addressing AI-related risks.

International Leadership and Collaboration:

The UK was recognized as a leader in managing risks from emerging AI advancements. The Summit was seen as an opportunity to pool global expertise and explore common measures to address AI risks. Participants believed that the UK could differentiate itself by developing regulations that incentivize safe AI deployment. The importance of open-source AI and technical standards in promoting safe and secure AI development was emphasized. Collaborative frameworks for real-time evaluation of AI models, including Large Language Models, were also discussed.

A Role for Regulation on AI:

Participants acknowledged the central role of government in regulating AI to prevent harm. They supported an outcome-focused approach to AI regulation that manages disinformation risks and protects privacy and intellectual property (IP) rights. They highlighted the need for public input, engagement with social scientists, and action on the risk of bias in AI. International collaboration in establishing regulations was deemed crucial, and the role of industry-led standards development was emphasized.

Next Steps:

The AI Safety Summit aims to mitigate the risks of frontier AI through international coordination. Initiatives like the Frontier AI Taskforce and the AI Safety Institute will evaluate and test new AI models, addressing risks that range from bias and disinformation to cybersecurity and biosecurity. These efforts will build domestic capabilities and drive the global conversation on AI safety.