Translation in Your Ears: Google Translate Goes Live on Headphones

Google is rolling out a beta “Live translate” experience in the Google Translate app that lets you hear real-time translations through any headphones, aiming to preserve a speaker’s tone, emphasis, and cadence so conversations are easier to follow. The initial rollout is on Android in the U.S., Mexico, and India, with support for 70+ languages, and Google says it plans to expand to iOS and more countries in 2026.

Alongside that, Google is adding Gemini-powered improvements that make text translations sound more natural and better handle nuanced meaning such as idioms and slang. This update is rolling out in the U.S. and India for translations between English and nearly 20 languages, and it’s available on Android, iOS, and web. The Translate app’s language-learning tools are also expanding to nearly 20 new countries, with upgraded speaking feedback and a streak-style tracker to encourage consistency.

Google’s Deep Research Agent Levels Up — Right as GPT-5.2 Drops

Google introduced a “reimagined” version of Gemini Deep Research, built on Gemini 3 Pro, positioning it as its most capable research agent yet. The big shift is that Deep Research isn’t only for generating reports anymore — Google is now letting developers embed its research capabilities into their own apps via a new Interactions API, aiming to give builders more control as “agentic AI” becomes the default workflow.

Google says the upgraded agent can sift through and synthesize large volumes of information and handle very long prompts, with real-world use cases ranging from due diligence to drug-toxicity safety research. It’s also slated for integration into products like Google Search, Google Finance, the Gemini app, and NotebookLM, and Google highlights Gemini 3 Pro’s focus on being more “factual” and hallucinating less in longer, multi-step tasks. To back its claims, Google introduced (and says it open-sourced) a new benchmark called DeepSearchQA and reported strong results on other benchmarks too, but the launch was instantly framed as part of a rivalry because OpenAI released GPT-5.2 the very same day.

Disney Draws a Hard Line on AI: Cease-and-Desist Lands on Google

Disney has sent Google a cease-and-desist letter accusing the company of “massive” copyright infringement, alleging Google’s AI models and services have been used to commercially distribute unauthorized images and videos featuring Disney-owned characters. The letter reportedly describes Google as a “virtual vending machine” that can reproduce and distribute Disney’s copyrighted works at scale, and claims some outputs even carry a Gemini logo, which Disney says could wrongly imply Disney’s approval.

The letter also names a range of Disney IP it says is being infringed, spanning titles like Frozen, The Lion King, Moana, The Little Mermaid, and Deadpool. Google neither confirmed nor denied the allegations but said it would engage with Disney. It emphasized its long relationship with the company, noted that it builds AI using public web data, and pointed to copyright controls such as Google-Extended and YouTube’s Content ID. The dispute surfaced the same day Disney announced a $1 billion, three-year deal with OpenAI tied to Sora.

Disco Turns Your Tabs Into Apps

Google has introduced Disco, a new Google Labs experiment that uses Gemini to help people turn what they’re browsing into custom, interactive mini web apps. Its core feature, “GenTabs,” can proactively suggest an app based on your open tabs, or build one from a written prompt, to help you complete whatever task your browsing session is really about.

Instead of just answering questions like a chatbot, GenTabs can assemble an app experience on the fly: a study visualizer for a topic you’re learning, a meal plan pulled from several recipes you’ve opened, or a trip-planning tool while you research travel. Google says GenTabs draw on your open tabs plus your Gemini chat history, and you can keep refining an app with natural-language commands. Disco is starting small, with a limited group of testers through Google Labs and a waitlist for the macOS download, and Google frames GenTabs as the first of more Disco features to come.

Runway’s Next Leap: World Models + Videos That Finally Talk

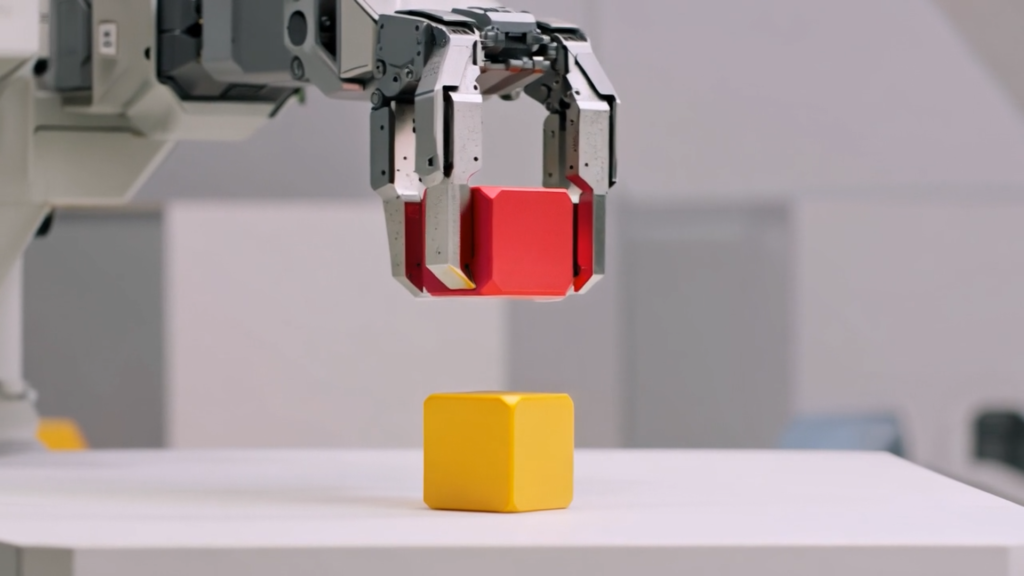

Runway has unveiled GWM-1, its first “world model,” designed to predict video frames one step at a time to simulate environments with a better grasp of physics and of how scenes evolve over time. The company is positioning it as a more “general” approach than some rivals’ and says it can generate simulations useful for training AI agents in areas like robotics and the life sciences. It’s launching three specialized variants: GWM-Worlds (interactive scene exploration from a prompt or reference image), GWM-Robotics (synthetic data for robotics scenarios involving changing weather, obstacles, and potential policy violations), and GWM-Avatars (realistic simulations of human behavior for communication and training).

At the same time, Runway is upgrading Gen-4.5 with native audio and long-form, multi-shot generation to make outputs feel more production-ready: videos up to one minute long with consistent characters, dialogue, background audio, and more complex multi-angle shots, plus tools to edit existing audio and add dialogue. The update is positioned as a step toward all-in-one video creation suites, and it’s available to paid-plan users.