Tesla’s Robotaxi Reveal: Unpacking the Buzz Around the Digital Eye

The recent Tesla Robotaxi invite email has sparked conversation among enthusiasts, particularly about the eye-like digital graphic at the center of its design. The unconventional choice has drawn varied interpretations, with many asking why Tesla chose this imagery over a picture of the Robotaxi itself. Tesla has so far kept most of the vehicle’s design hidden, and the prototypes spotted at Warner Bros. Studio were heavily disguised, but anticipation continues to build after a recent official video offered glimpses of the interior. The digital eye may be symbolic: it hints at a future in which AI and robotics play an integral role in daily life, and it reinforces Tesla’s effort to position itself in the emerging field of artificial general intelligence.

As the October 10th event approaches, the “We, Robot” slogan evokes collaboration between humans and machines. Tesla aims to disrupt traditional ride-sharing with its Cybercab, building on advances in autonomous technology and the success of its Full Self-Driving features. Speculation abounds about whether Tesla will sell the new Robotaxi or retain ownership to maximize revenue, a shift from earlier plans involving leased Model 3s. Centered on a purpose-built, two-seater vehicle, the reveal promises to be one of the most significant moments in automotive history and could set the stage for a transformative era in transportation.

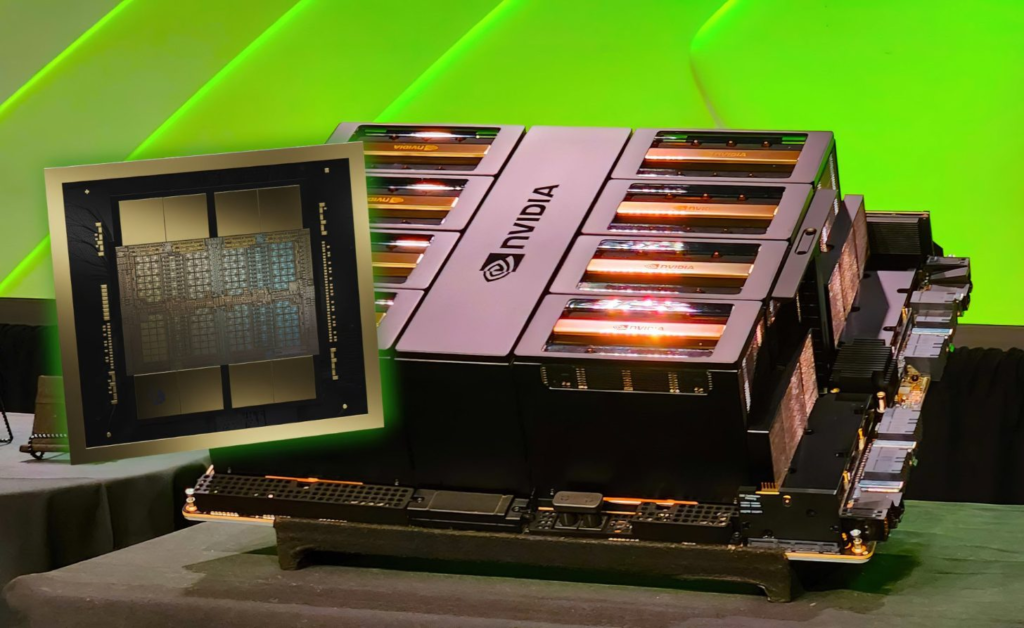

NVIDIA’s Blackwell Architecture: Revolutionizing OpenAI’s o1 LLM with Unmatched Inferencing Power

NVIDIA’s latest Blackwell architecture is set to power OpenAI’s new o1 model. In a recent announcement, NVIDIA CEO Jensen Huang highlighted the architecture’s 50x uplift in inference performance, a significant advance for AI hardware. As the industry shifts from generative AI hype toward models that emphasize reasoning and inference, o1 is designed to produce responses that more closely mimic human thought processes. That evolution is seen as a step toward artificial general intelligence, and it underscores the growing demand for computational power in AI development.

The implications of Blackwell’s capabilities extend beyond OpenAI and point to NVIDIA’s pivotal role in shaping the future of AI technology. The architecture is expected to become a cornerstone for major firms, including Meta, Microsoft, and Amazon, as they build out their own AI initiatives. With Blackwell products anticipated to reach the market between Q4 2024 and Q1 2025, the stage is set for a new phase of AI development in which reasoning-focused LLMs change how people interact with machines. As NVIDIA continues to lead this shift, its collaboration with OpenAI signals new possibilities for both AI and society at large.

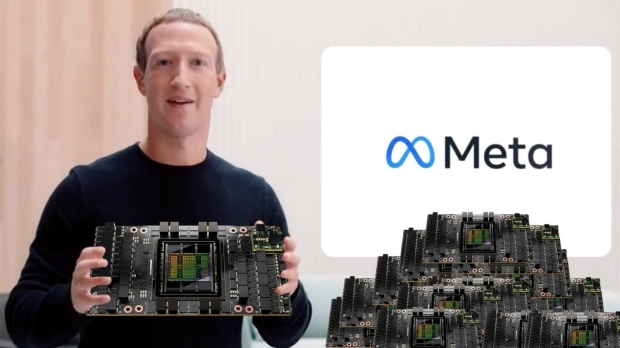

Meta Nears Completion of 100,000+ NVIDIA H100 AI GPU Supercomputer for Llama 4

Meta Platforms is close to launching an AI supercomputer with more than 100,000 NVIDIA H100 AI GPUs, built to train its upcoming Llama 4 model. According to recent reports, the supercomputer is expected to be fully operational by October or November, a significant milestone in Meta’s AI ambitions. The new cluster, located in the U.S., reflects the scale of Meta’s investment in artificial intelligence: the GPUs alone reportedly cost over $2 billion.
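For a sense of scale, the two reported figures can be turned into a rough per-GPU cost. The Python sketch below takes both numbers at face value; since each is reported only as “over” a round figure, the result is a ballpark average rather than an actual unit price, and the function name is purely illustrative.

```python
# Figures as reported above. Both are stated as "over" a round number,
# so the ratio below is a ballpark average, not a precise unit price.
REPORTED_GPU_COUNT = 100_000
REPORTED_GPU_SPEND_USD = 2_000_000_000


def implied_cost_per_gpu(total_spend_usd: int, gpu_count: int) -> float:
    """Return the average spend per GPU implied by a total and a unit count."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_spend_usd / gpu_count


if __name__ == "__main__":
    per_gpu = implied_cost_per_gpu(REPORTED_GPU_SPEND_USD, REPORTED_GPU_COUNT)
    print(f"Implied average cost per H100: about ${per_gpu:,.0f}")
```

On the reported numbers, that works out to roughly $20,000 per H100.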

The supercomputer showcases Meta’s push to advance AI and highlights its close partnership with NVIDIA. In a conversation between NVIDIA CEO Jensen Huang and Meta CEO Mark Zuckerberg, it emerged that Meta has accumulated more than 600,000 H100 GPUs in total, underlining its position as one of NVIDIA’s major customers. As Meta prepares to use this infrastructure to develop Llama 4, the industry is watching for AI advances that could reshape how people interact with technology.

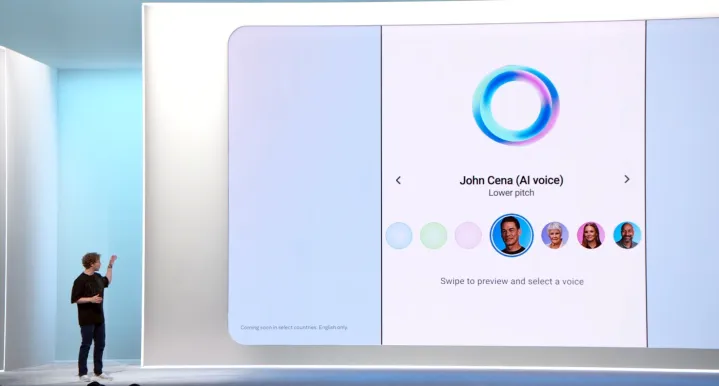

Meta Unveils Advanced Voice Interactions at Connect 2024

At Meta Connect 2024, CEO Mark Zuckerberg introduced a feature called Natural Voice Interactions, positioning it as a direct competitor to Google’s Gemini Live and OpenAI’s Advanced Voice Mode. Zuckerberg emphasized the importance of voice as a more natural interface for AI, stating, “I think it has the potential to be one of the most frequent ways that we all interact with AI.” The feature is set to roll out across Meta’s major apps, including Instagram, WhatsApp, Messenger, and Facebook, letting users talk to the AI conversationally instead of typing text prompts. Users will also be able to choose from several celebrity voices to personalize the experience.

In addition to Natural Voice Interactions, Zuckerberg announced updates to the Llama model, which has now reached version 3.2. This version introduces multimodal capabilities, allowing it to interpret charts and images and to generate captions. However, these capabilities will not be available in Europe due to regulatory concerns regarding data usage. Instead, Meta will offer lighter versions of the Llama model, specifically designed for mobile devices. Zuckerberg also mentioned a new feature, “Imagined for You,” which will place AI-generated images in users’ feeds. With these announcements, Meta is investing heavily in AI and positioning itself as a leader in the field.