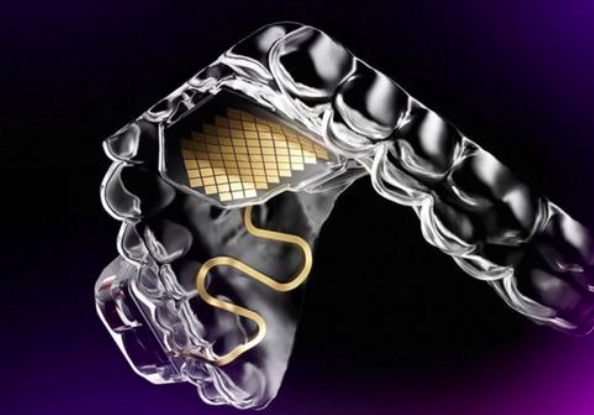

MouthPad: Revolutionary Touchpad for Individuals with Paralysis

Augmental, an MIT startup, has introduced the MouthPad, a touchpad that lets people with paralysis control devices using tongue and head movements. The pressure-sensitive pad sits against the roof of the mouth and translates these gestures into real-time cursor actions, which it sends over Bluetooth. Because each unit is custom-fitted to its user, the MouthPad can significantly increase independence for people with spinal cord injuries, letting them interact with smartphones, tablets, and computers. Augmental is pursuing FDA clearance to broaden the device’s functionality and accessibility.
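Augmental has not published how the MouthPad turns tongue pressure into cursor motion, so the following is only a hypothetical sketch of one common touchpad technique: compute the pressure-weighted centroid of the contact on a small sensor grid in each frame, then map the centroid’s frame-to-frame movement to a cursor displacement. The grid layout, the `threshold` and `gain` values, and all function names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical sensor frame: rows x cols of normalized pressure readings (0.0-1.0).
Grid = List[List[float]]
Point = Tuple[float, float]  # (row, col) in sensor-grid units


@dataclass
class CursorDelta:
    dx: float  # horizontal cursor movement, in pixels
    dy: float  # vertical cursor movement, in pixels


def pressure_centroid(grid: Grid, threshold: float = 0.1) -> Optional[Point]:
    """Return the pressure-weighted centre of contact, or None if nothing is touched."""
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(grid):
        for c, p in enumerate(row):
            if p >= threshold:  # ignore readings below the assumed noise floor
                total += p
                row_sum += r * p
                col_sum += c * p
    if total == 0.0:
        return None
    return (row_sum / total, col_sum / total)


def cursor_delta(prev: Optional[Point], curr: Optional[Point], gain: float = 20.0) -> CursorDelta:
    """Scale centroid movement between two frames into a cursor displacement."""
    if prev is None or curr is None:  # touch just started or ended: don't jump
        return CursorDelta(0.0, 0.0)
    return CursorDelta(dx=(curr[1] - prev[1]) * gain, dy=(curr[0] - prev[0]) * gain)


# Usage: the tongue slides one sensor cell to the right between two frames.
frame1 = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
frame2 = [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
print(cursor_delta(pressure_centroid(frame1), pressure_centroid(frame2)))
# CursorDelta(dx=20.0, dy=0.0)
```

Tracking relative centroid motion, rather than absolute contact position, lets a small sensor area drive a large screen, much as a laptop trackpad does.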

Tomás Vega, co-founder and CEO of Augmental, was driven by his passion for human augmentation and by his own experience overcoming speech challenges. Drawing on a background in assistive technology development, Vega worked with Corten Singer to build a practical device that meets users’ immediate needs. The MouthPad is 3D-printed from dental-grade materials and custom-fitted to each user’s mouth, making it both comfortable and precise. Because it builds on the brain’s natural capacity to control the tongue precisely, the device offers a versatile interface that adapts to a range of motor abilities.

Beyond enhancing daily interactions, the MouthPad has the potential to revolutionize human-computer interaction for a broader audience, including gamers and programmers. Augmental’s vision includes integrating more advanced features like controlling wheelchairs and robotic arms, furthering the independence of individuals with severe impairments. With continued development and anticipated regulatory approvals, the MouthPad stands to become a pioneering tool in the realm of assistive technology, transforming lives and setting new standards for accessibility.

Kling AI: A New Challenger in Text-to-Video Generation

Kling AI, developed by the Chinese company Kuaishou, is a text-to-video generative model that competes with OpenAI’s Sora. It can create highly realistic 1080p videos up to 2 minutes long, with accurate physical simulation, lifelike facial expressions, and complex body movements. Built on a Diffusion Transformer architecture and a 3D VAE (variational autoencoder), Kling AI turns text prompts into diverse, immersive video content. Although it is currently in beta, it promises to significantly advance the video generation industry.

Kling AI’s standout features include its ability to replicate realistic expressions and movements, create videos in various aspect ratios, and support large-scale motion modeling. Its impressive video outputs, such as a panda playing the guitar or a cat driving, highlight its potential to bring creative and imaginative scenarios to life. The model’s capacity for generating lengthy, dynamic videos positions it as a formidable competitor to existing AI video models, pushing the boundaries of what is possible in generative AI technology.

Kling AI poses a significant challenge to Sora. On paper, its ability to produce 2-minute, 1080p videos with realistic physics and detailed expressions gives it a substantial edge, since OpenAI has advertised Sora clips of up to one minute. Kling’s use of a 3D VAE and variable-resolution training supports more dynamic and lifelike output, and its flexibility in aspect ratios and handling of large-scale motion make it adaptable to a wide range of creative requirements, further strengthening its appeal as an alternative to Sora.
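One reason the 3D VAE and variable aspect ratios matter is sequence length: a Diffusion Transformer works on patches of a compressed latent video, and compressing along time as well as space shrinks that sequence. The sketch below counts latent patch tokens under illustrative assumptions (4× temporal and 8× spatial compression, 2×2 patches, 30 fps); these are not Kling’s disclosed settings, and the count ignores details such as a separately encoded first frame.

```python
import math


def latent_tokens(frames: int, height: int, width: int,
                  t_down: int = 4, s_down: int = 8, patch: int = 2) -> int:
    """Count patch tokens a diffusion transformer would see for one video.

    t_down and s_down are assumed VAE compression factors in time and space;
    patch is an assumed spatial patch size on the latent grid.
    """
    lat_t = math.ceil(frames / t_down)   # latent frames after temporal compression
    lat_h = math.ceil(height / s_down)   # latent height
    lat_w = math.ceil(width / s_down)    # latent width
    per_frame = math.ceil(lat_h / patch) * math.ceil(lat_w / patch)
    return lat_t * per_frame


frames = 2 * 60 * 30  # 2 minutes at an assumed 30 fps = 3600 frames

wide_3d = latent_tokens(frames, 1080, 1920)            # 3D VAE: compresses time too
wide_2d = latent_tokens(frames, 1080, 1920, t_down=1)  # per-frame (2D) compression only
square = latent_tokens(frames, 1080, 1080)             # 1:1 aspect ratio, same height

print(f"{wide_3d:,} {wide_2d:,} {square:,}")
# 7,344,000 29,376,000 4,161,600
```

Under these assumptions, temporal compression cuts the sequence by 4×, and each aspect ratio yields a differently shaped latent grid, which a model that outputs several aspect ratios must handle.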

Humanoid Robot Conquers the Great Wall: A Milestone in AI Robotics

Robot Era, a Chinese robotics company, made headlines when its AI-powered humanoid robot, XBot-L, climbed the Great Wall of China. The 1.65-meter-tall XBot-L demonstrated impressive locomotion, dexterity, and balance, thanks to the company’s perceptive reinforcement learning algorithms. These algorithms let the robot navigate uneven terrain and adjust its walking stance in real time, a notable advance in embodied AI. Despite a few minor setbacks along the way, the climb marks a milestone for humanoid robotics.

Robot Era was incubated by Tsinghua University’s Institute for Interdisciplinary Information Sciences. XBot-L combines high-torque-density modular joints with a structural design built from materials such as high-strength alloys and carbon fiber, which add strength and stability while keeping the robot visually appealing. The company also pairs advanced force-control algorithms with a large language model so that its robots can better understand and interact with humans, pushing what humanoid robots can achieve in both domestic and industrial settings.

Eve Humanoid Robot Update: Seamless Multi-Tasking with Voice Commands

1X Technologies has upgraded its humanoid robot, Eve, so that it can perform multiple tasks in sequence from voice commands. A new natural-language voice interface lets users instruct Eve to complete a series of related tasks autonomously; in a demonstration video, Eve tidies an office by picking up objects and wiping tables clean. The upgrade consolidates multiple processes into a single neural network, which improves the robot’s handling of complex task sequences and makes it more versatile for both domestic and industrial applications.

The upgrade tackles the challenge of chaining robot skills, where each task must cope with whatever variations the previous one left behind. By merging single-task models into goal-conditioned models, 1X lets Eve move between tasks seamlessly, hiding that complexity from the user behind one powerful, unified model. Backed by the OpenAI Startup Fund, 1X Technologies continues to push the boundaries of robotic capabilities and to make Eve a more reliable assistant in a variety of settings.
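1X has not released Eve’s model, but the idea of one goal-conditioned policy replacing several single-task models can be illustrated with a toy example. In this sketch, a single `policy(world, goal)` function serves every goal, a hypothetical `parse_command` splits a spoken instruction into goals, and `run_sequence` executes them in order, with each goal starting from whatever state the previous one left behind. The 1-D world, the verbs, and every name here are invented for illustration; a real system would replace the rule-based `policy` with a learned neural network.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

Goal = Tuple[str, str]    # (verb, target), e.g. ("pick", "cup")
Action = Tuple[str, str]  # (primitive, target), e.g. ("move", "cup")


@dataclass
class World:
    robot_x: int                # robot position on a toy 1-D track
    locations: Dict[str, int]   # named objects/places -> track position
    holding: Optional[str] = None
    wiped: Set[str] = field(default_factory=set)


def parse_command(text: str) -> List[Goal]:
    """Hypothetical parser: 'pick cup then place bin' -> [('pick', 'cup'), ('place', 'bin')]."""
    goals = []
    for clause in text.lower().split(" then "):
        verb, target = clause.strip().split(maxsplit=1)
        goals.append((verb, target))
    return goals


def policy(world: World, goal: Goal) -> Action:
    """One goal-conditioned policy for every task: the action depends on state AND goal.
    A rule-based stand-in for what would be a learned network."""
    verb, target = goal
    if world.robot_x != world.locations[target]:
        return ("move", target)
    return ({"pick": "grasp", "place": "release", "wipe": "wipe"}[verb], target)


def step(world: World, action: Action) -> None:
    """Apply one primitive action to the toy world."""
    name, target = action
    if name == "move":
        world.robot_x += 1 if world.locations[target] > world.robot_x else -1
    elif name == "grasp":
        world.holding = target
    elif name == "release" and world.holding is not None:
        world.locations[world.holding] = world.locations[target]  # drop object here
        world.holding = None
    elif name == "wipe":
        world.wiped.add(target)


def goal_done(world: World, goal: Goal) -> bool:
    verb, target = goal
    if verb == "pick":
        return world.holding == target
    if verb == "place":
        return world.holding is None
    return target in world.wiped  # "wipe"


def run_sequence(world: World, goals: List[Goal], max_steps: int = 50) -> List[Action]:
    """Execute goals in order; each starts from the state the previous goal left behind."""
    log: List[Action] = []
    for goal in goals:
        steps = 0
        while not goal_done(world, goal):
            if steps == max_steps:
                raise RuntimeError(f"goal {goal} not reached in {max_steps} steps")
            action = policy(world, goal)
            step(world, action)
            log.append(action)
            steps += 1
    return log


world = World(robot_x=0, locations={"cup": 2, "bin": 5, "table": 3})
log = run_sequence(world, parse_command("Pick cup then place bin then wipe table"))
print(len(log), world.robot_x, world.locations["cup"], sorted(world.wiped))
# 10 3 5 ['table']
```

Note that the "wipe table" goal begins at position 5, where "place bin" ended, so the same policy has to work from a starting state that no single-task model was trained around.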

Perplexity AI Launches Feature to Transform Searches into Shareable Pages

Perplexity AI has introduced Perplexity Pages, which lets users turn search queries into detailed, visually appealing reports, articles, or guides. Users can specify the target audience and customize each section by adding, rewriting, or removing content. The tool pulls in relevant media, and finished pages can be published and are searchable via Google. Because the feature is aimed at curating rather than generating content, it emphasizes user control over the AI’s assistance. Perplexity Pages is currently available to a limited number of users, with wider availability planned.

Henry Modisett, Perplexity’s head of design, said the company’s goal was to build on its core technology to create a research tool whose results are easier to share and present. He noted that the feature lets users curate and organize information, leaving significant room for a human touch in creating engaging content. While the AI generates the initial responses, the final product depends heavily on user input, so each page reflects its author’s own preferences and decisions. The feature aims to balance AI efficiency with human creativity and could reshape how people curate information on the web.